Big Data, small task, small data, Big Task: how two different approaches to AI might come together

Mainstream AI is a black box, big data gas guzzler for doing small tasks. Frugal, transparent small data AI can do big tasks but needs a lot of help.

This week an article in The Guardian caught my eye. It was about a distinguished university professor who had left his highly paid job in the US to lead an AI institute in his home country, China.

His name is Song-Chun Zhu, and he would be a household name like the AI gods of the US if not for his field of study, which takes a fundamentally different approach from mainstream AI.

Mainstream AI is statistical. Using a huge corpus of data, LLMs are trained to predict which word should follow another. Pattern recognition and probability are used to generate text that appears coherent and relevant, but scientists can’t quite explain how the models arrive at it.

Song-Chun Zhu and his school approach AI very differently. They argue that LLMs are black boxes that parrot language with no understanding. Instead, says Zhu, AI should develop intent-driven, raven-like intelligence that we can explain and trust.

Zhu’s teams are mapping objects, scenes, actions, and events: objects in 3D space, how they change in time, and how changes cause effects. The result: the Spatial-Temporal-Causal And-Or Graph, or STC-AOG.

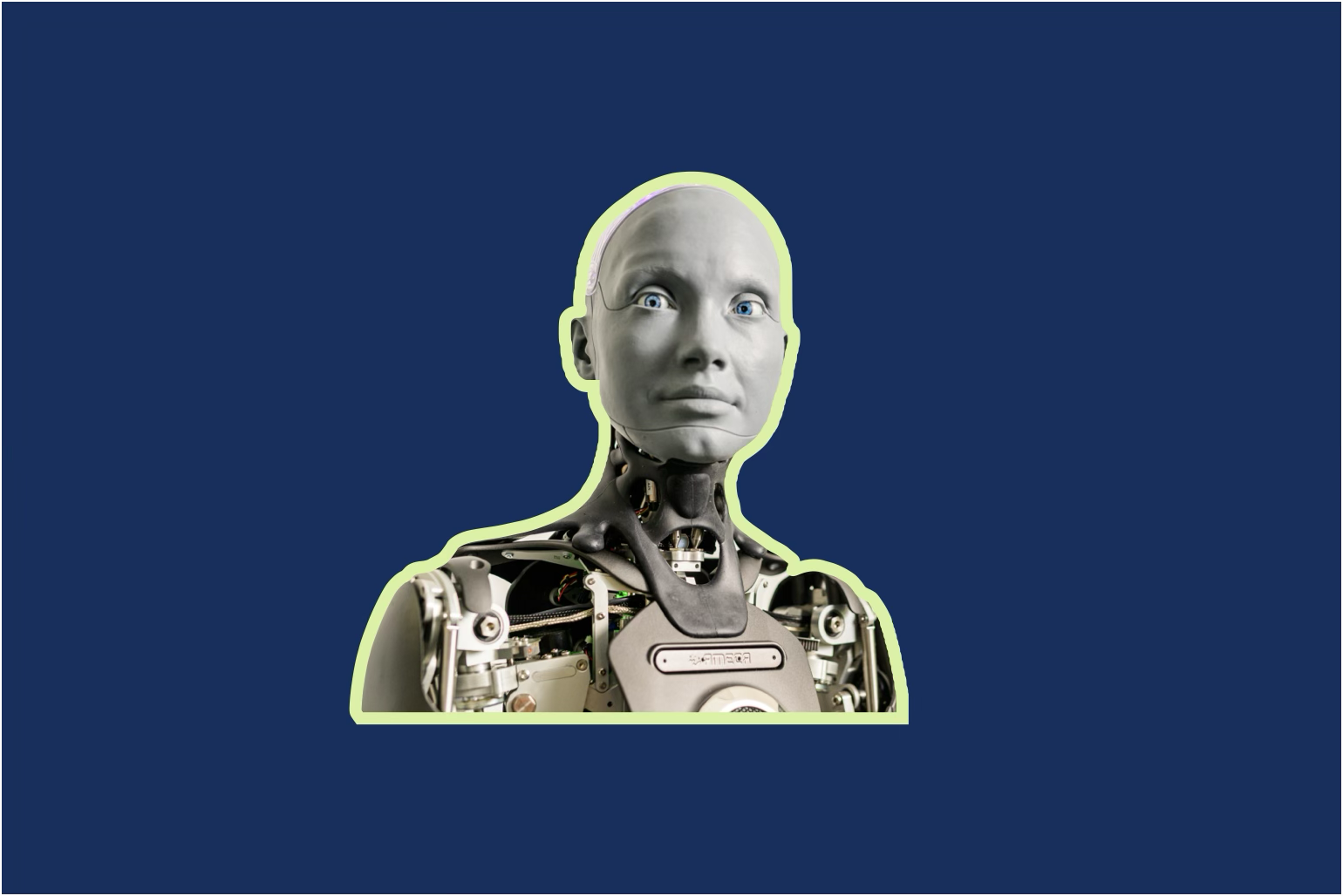

This approach is very much suited to robotics, and in fact the most illuminating examples come from robotics, such as a robot manipulating a cup of tea.

Actions are broken down into parts: recognise the cup (object), its location (spatial), the action of grasping (temporal), and the effect of lifting it (causal).
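To make the breakdown concrete, here is a minimal sketch of how such a decomposition could be written down as an And-Or graph. It is an illustration only, not Zhu's implementation: the `Node` class, the `expand` function, and the two alternative grasps (by handle or by body) are hypothetical names and choices made up for this example. An "and" node needs all of its children in order, an "or" node picks one alternative.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """One node of a toy And-Or graph (hypothetical structure for illustration)."""
    name: str
    kind: str                      # "and", "or", or "leaf"
    aspect: str = ""               # for leaves: "object", "spatial", "temporal", "causal"
    children: list[Node] = field(default_factory=list)


def expand(node, choose=lambda options: options[0]):
    """Return the leaf steps of one parse of the graph.

    An and-node contributes all of its children in order;
    an or-node contributes exactly one child, picked by `choose`.
    """
    if node.kind == "leaf":
        return [node]
    if node.kind == "or":
        return expand(choose(node.children), choose)
    steps = []
    for child in node.children:
        steps.extend(expand(child, choose))
    return steps


# The cup-of-tea task from the text. The two grasp variants are an assumption
# added only to show what an or-node looks like.
grasp = Node("grasp", "or", children=[
    Node("grasp by handle", "leaf", "temporal"),
    Node("grasp by body", "leaf", "temporal"),
])
lift_cup = Node("lift cup of tea", "and", children=[
    Node("recognise cup", "leaf", "object"),
    Node("locate cup", "leaf", "spatial"),
    grasp,
    Node("cup is lifted", "leaf", "causal"),
])

plan = [(step.aspect, step.name) for step in expand(lift_cup)]
for aspect, name in plan:
    print(f"{aspect:>8}: {name}")
```

Because every step in the plan is a named node with a known role, the robot's behaviour can be read off the graph directly, which is the sense in which this kind of AI is explainable.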

This is “small data” as opposed to “big data” because relatively small amounts of data are used. It only takes 100-200 data points for the cup of tea example. Better still, the robot can learn from just a few examples, much like a human.

Compare this to big data, where billions of data points are needed.

What is more, answering even a simple question with an LLM costs as much compute energy as leaving a 60 W light bulb on for 30 minutes. In contrast, the cup of tea example would expend 1/20th of that energy, most of which is for motion, not compute.

However, STC-AOG is much more domain-specific than LLMs, and therefore much less scalable. The teacup example? Three weeks for a team of three.

Which is why STC-AOG is mostly experimental. But that will change.

The big problem with mainstream AI is lack of accountability. We don’t know exactly how these models work, so they are unacceptable in areas like healthcare and finance. For regulatory compliance or safety-critical applications we need Explainable AI.

Explainable, like STC-AOG.

But what about scalability? Simple, actually. Employ LLMs to create the STC graphs. Energy costs for building graphs will be higher, but production will be far more scalable.

In such a hybrid system we would use LLMs for high-level planning and STC-AOG for execution. The LLM would generate the STC-AOG rules at a one-time cost. We would then deploy the STC-AOG on a robot for thousands of tasks at a tiny 1–5 Wh each.
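A rough back-of-the-envelope sketch shows why the one-time cost matters less as the number of tasks grows. The 30 Wh per LLM query (a 60 W bulb for 30 minutes) and the 1–5 Wh per STC-AOG task are the figures used in this article. The one-time graph-building cost of 10,000 Wh is purely a made-up placeholder, as is the assumption that an LLM-only system would need one query per task.

```python
import math

# Figures taken from the article
LLM_QUERY_WH = 60 * 0.5            # 60 W for half an hour = 30 Wh per query
STC_TASK_WH_RANGE = (1.0, 5.0)     # energy per deployed STC-AOG task

# Hypothetical placeholder: one-time energy for the LLM to build the graph
GRAPH_BUILD_WH = 10_000.0


def hybrid_total_wh(tasks, per_task_wh, build_wh=GRAPH_BUILD_WH):
    """Total energy when the graph is built once and then reused."""
    return build_wh + tasks * per_task_wh


def llm_only_total_wh(tasks, per_query_wh=LLM_QUERY_WH):
    """Total energy if every task needs one LLM query (an assumption)."""
    return tasks * per_query_wh


def break_even_tasks(per_task_wh, build_wh=GRAPH_BUILD_WH, per_query_wh=LLM_QUERY_WH):
    """Smallest task count at which the hybrid uses no more energy than LLM-only."""
    saving_per_task = per_query_wh - per_task_wh
    if saving_per_task <= 0:
        return None                # the hybrid never catches up
    return math.ceil(build_wh / saving_per_task)


for per_task in STC_TASK_WH_RANGE:
    n = break_even_tasks(per_task)
    print(f"{per_task} Wh per task: hybrid breaks even after {n} tasks")
```

With a different build cost the break-even point shifts, but the shape of the argument stays the same: the build cost is paid once, while the per-task saving repeats on every task.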

It appears that hybrid is the future.